
602 Park Point Drive, Suite 225, Golden, CO 80401 – +1 303.495.2073

© 2025 Medical Affairs Professional Society (MAPS). All Rights Reserved Worldwide.

What’s new?

“ChatGPT” is a new natural language processing (NLP) tool developed by OpenAI that has been praised for its humanlike responses and its ability to correct and amend its answers in response to suggestions. It is taking the information world by storm.

Tech giant Microsoft has announced a multiyear, multibillion-dollar investment in OpenAI, the developer of ChatGPT, extending the partnership between the two companies.

However, while ChatGPT has impressed many with its abilities, it has also raised concerns, particularly in the academic and research sectors.

OpenAI recently announced the launch of a paid version, “ChatGPT Pro,” a professional tier expected to offer faster responses, more reliable access, and priority access to new features.

Can a bot become an author for medical content?

ChatGPT has made its formal debut in the scientific literature, racking up at least four authorship credits on published papers and preprints.

The OpenAI bot was recently the subject of a study that concluded the tool performed “comfortably within the passing range” of a US medical licensing exam. The model demonstrated a high level of concordance and insight in its explanations, the researchers concluded. According to the study, this could mean that such artificial intelligence models may be able to help with medical education and even clinical decision-making.

In response to the recent chaos, the World Association of Medical Editors (WAME) published a position paper on ChatGPT and chatbots in relation to scholarly publications. It recommends that chatbots cannot be authors, and that the content of a paper remains the ownership and responsibility of its authors.

My first thought is that, like all artificial intelligence technologies, ChatGPT is based on proficient analysis of existing knowledge. However, the model sometimes gives plausible-sounding but incorrect responses. For the time being, a major limitation is its difficulty in formulating research plans and gathering new data.

Has ChatGPT made inroads into Medical Affairs?

ChatGPT and other chatbot writing assistants can develop content for medical communications and research writing, including disease and medicine summaries, patient information, and lay summaries.

However, it is important to keep in mind that ChatGPT has not yet been specifically trained on medical subjects or for the purpose of medical or life sciences writing.

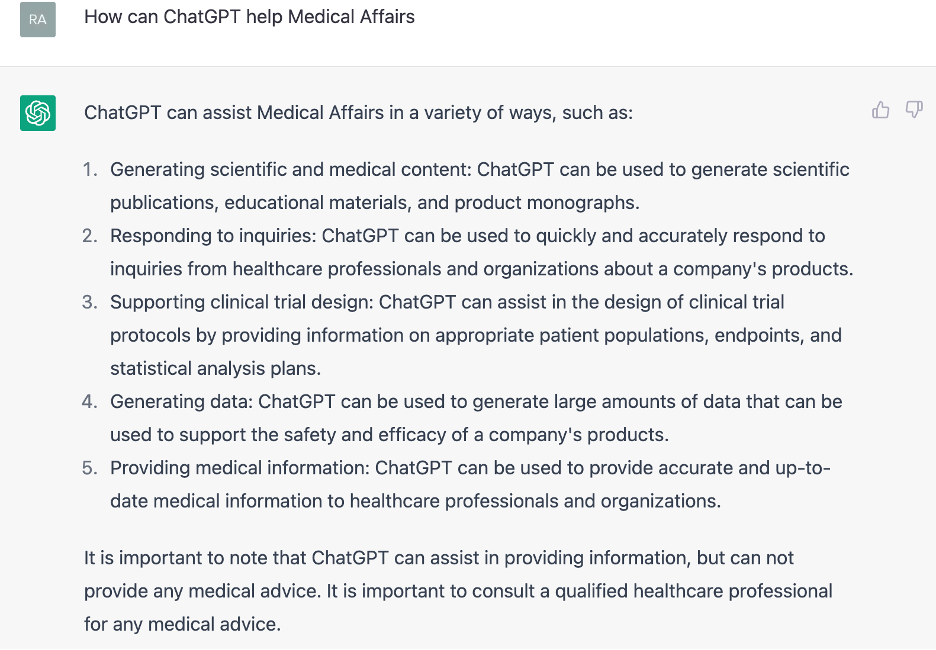

Therefore, when asked “how can ChatGPT help medical affairs?”, it listed the services it could probably provide, along with a cautionary statement about the need for ‘human intervention’ and ‘verification’ by a healthcare expert.

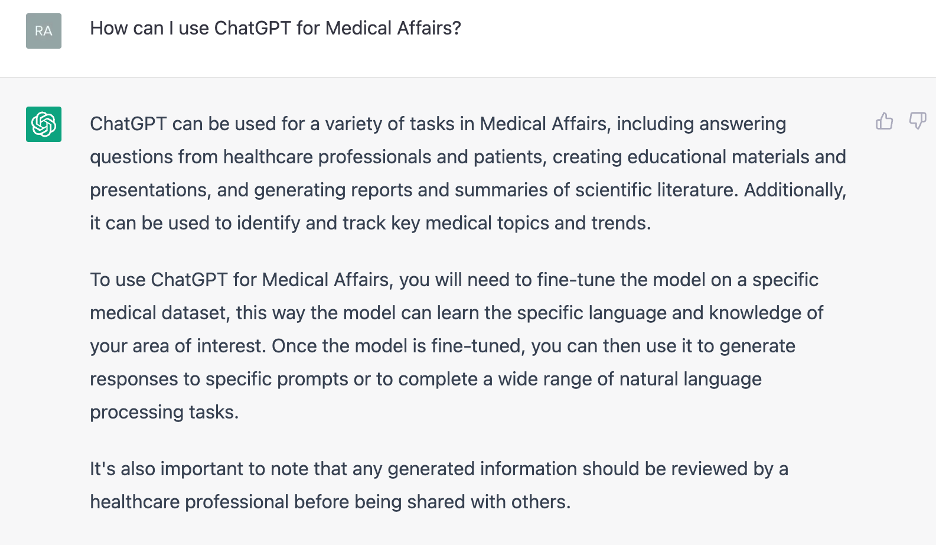

When I tried probing the tool a different way, it suggested fine-tuning the model on a specific medical dataset, which is not yet a fully tested methodology for this tool. This and many other tests demonstrate the need for expert review of ChatGPT content.

In my opinion, the biggest blind spot in ChatGPT at present is its inability to provide source information and cross-references in every response to medical queries, which calls into question the authenticity of the information it generates. Healthcare and clinical practice are based on evidence, and these missing links may prevent its immediate use in medical communications.

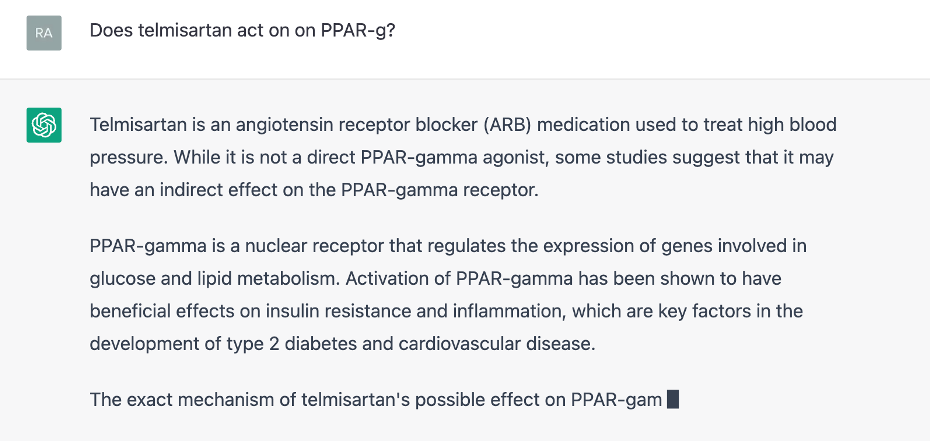

For instance, in the scenario shown, the information given in the response about telmisartan appears to be accurate, but no references are provided, whereas patient care and treatment are offered mainly on the basis of published evidence. Response generation also stopped abruptly before the answer was complete, which could arguably be overlooked.

However, ChatGPT can provide disease and treatment summaries quickly, which can then be reviewed, modified, authenticated, and used for a specific purpose.

Bots are here and here to stay

The other side of the coin is inevitability: there is no question that ChatGPT and other chatbots will arrive in healthcare and across Medical Affairs. Eventually, they are going to transform the medical communication and writing space by delivering tailored information to specific healthcare audiences at a single click, though their involvement will vary between healthcare fields.

Although no field will be left untouched, it seems unlikely that ChatGPT and other chatbots will replace human beings altogether. Let’s get used to the technology and leverage it to advance Medical Affairs together, which will make this transition easier and help maintain the ecosystem of future Medical Affairs.

Disclaimer: This article was not generated by chatbots.

The Innovate article series highlights the ideas of Medical Affairs thought leaders from across the biopharmaceutical and MedTech industries. To submit your article for consideration, please contact MAPS Communications Director, Garth Sundem.
